Some tools are more productive than others. While building a production machine learning pipeline, we came to appreciate the raw strength of combining SAP HANA with SAP Data Services. Reformulating our approach around this combination saved a significant amount of time compared with a vanilla approach that uses Python for data wrangling, cleaning, discovery, and normalization, all of which are major parts of machine learning pipeline development.

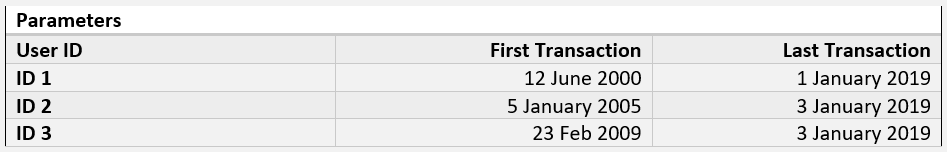

Consequently, we learned to split tasks between the tools according to the requirements, so that each tool is used to its full potential and the pipeline's run time is minimized. One of the strengths of SAP Data Services is its ability to run Python scripts, which lets us combine data modifications executed on SAP HANA with Python steps in a single pipeline. In the next part, we compare the time required to perform the same task in SAP HANA and in Python. The comparison covers one stage only; the task features the following parameters:

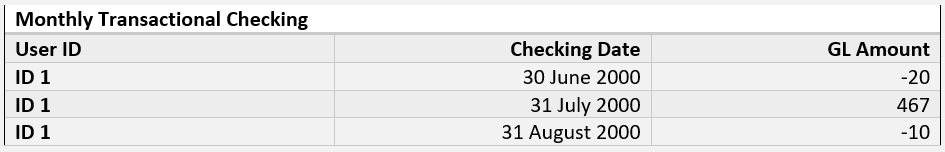

The script should feature the following parameters table and generate a monthly aggregation of each user’s behavior, converting it to a table as shown below.

With this method, we can check at the end of each month whether the user is in debt. Because we hold data for roughly 180,000 accounts, and each user may have made numerous transactions over as many as nine years, this script takes considerable time to run.

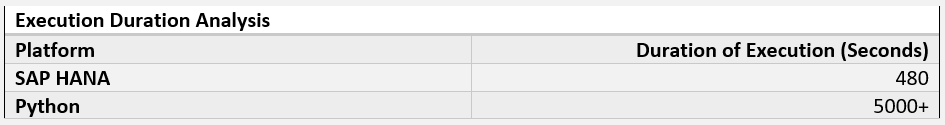
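To make the task concrete, here is a minimal pandas sketch of a monthly aggregation of this kind. The column names (`account_id`, `txn_date`, `amount`) and the rule that a negative running balance means the user is indebted are assumptions for illustration; the actual schema and business logic are not given in this article.

```python
import pandas as pd


def monthly_balances(transactions: pd.DataFrame) -> pd.DataFrame:
    """Aggregate transactions into one row per account per month.

    Assumed (hypothetical) input columns: account_id, txn_date, amount.
    """
    df = transactions.copy()
    # Bucket every transaction into its calendar month.
    df["month"] = pd.to_datetime(df["txn_date"]).dt.to_period("M")
    monthly = (
        df.groupby(["account_id", "month"], as_index=False)["amount"]
        .sum()
        .rename(columns={"amount": "net_amount"})
        .sort_values(["account_id", "month"])
    )
    # Running balance per account at the end of each month.
    monthly["end_balance"] = monthly.groupby("account_id")["net_amount"].cumsum()
    # Assumption: a negative end-of-month balance marks the user as indebted.
    monthly["indebted"] = monthly["end_balance"] < 0
    return monthly.reset_index(drop=True)


sample = pd.DataFrame(
    {
        "account_id": [1, 1, 1, 2, 2],
        "txn_date": ["2020-01-05", "2020-01-20", "2020-02-03", "2020-01-10", "2020-02-15"],
        "amount": [100.0, -150.0, 80.0, 50.0, -20.0],
    }
)
result = monthly_balances(sample)
print(result)
```

Note that months in which an account has no transactions do not appear in the output of this sketch; a real implementation would probably need to fill those gaps before checking month-end status.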

The table below compares the time required to execute this task in Python versus SAP HANA. Please note that Python offers multiple approaches to complete this task. The first method appends to the SAP HANA table directly using the `hdbcli` library. However, given the amount of data being processed, this approach would be rather time-consuming.

Consequently, we will use the second method: appending to a comma-separated values (CSV) file using the pandas `DataFrame.to_csv()` function. This method is faster, though it should be mentioned that we are not including the time needed to load the CSV into the table. We are only accounting for the time required for the script to output the results.
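As a rough illustration of the CSV approach, the sketch below appends result chunks to a single file with `DataFrame.to_csv(mode="a")`, writing the header only once. The helper name `append_to_csv`, the file name, and the sample columns are hypothetical and not taken from our actual script.

```python
import os
import tempfile

import pandas as pd


def append_to_csv(chunk: pd.DataFrame, path: str) -> None:
    """Append one chunk of results to a CSV file, writing the header only once."""
    write_header = not os.path.exists(path) or os.path.getsize(path) == 0
    chunk.to_csv(path, mode="a", header=write_header, index=False)


# Hypothetical output location and sample chunks, for illustration only.
out_path = os.path.join(tempfile.mkdtemp(), "monthly_results.csv")
chunks = [
    pd.DataFrame({"account_id": [1, 2], "end_balance": [-50.0, 50.0]}),
    pd.DataFrame({"account_id": [1, 2], "end_balance": [30.0, 30.0]}),
]
for chunk in chunks:
    append_to_csv(chunk, out_path)

written = pd.read_csv(out_path)
print(written)
```

Writing in append mode keeps only one chunk in memory at a time, which is the main reason to prefer it over building the full result before saving.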

As the comparison shows, Python required 5,000 seconds for this single process, whereas SAP HANA took a mere 480 seconds, roughly ten times faster. We considered the Python run time unacceptable; it stems from several issues that emerge when dealing with large volumes of data in Python. Some of these are the following:

The volume of data retrieved is too large to hold in memory, so it must be retrieved in batches. Yet each batch adds overhead to the execution time, because of the additional SELECT statements issued over the connection. Consequently, batching is a double-edged sword.

If a multi-processing approach is employed, we encounter the issue of running multiple large SELECT statements against SAP HANA, which take more time to execute. Multi-processing also eliminates the ability to load data directly into SAP HANA using INSERT statements with `hdbcli`. Therefore, we would need to output the data into separate files before merging them, as we cannot have two processes writing into the same file concurrently. Moreover, we encounter the same memory bottleneck: the processes must be programmed with memory limits in mind to prevent the script from crashing. This adds yet another layer of complexity.

Although we apply data type optimization in pandas to reduce execution time, the process remains sluggish because of the merge between transactional data and dimensional/feature data.

Most important are the management and maintenance problems that arise with large Python scripts such as the one above, which involve numerous data manipulations. For example, an error may occur in an iteration at row 3,000,000. Errors such as these are very difficult to trace and handle.
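The batching and error-tracing issues above can be sketched with the standard Python DB-API. The example uses the built-in `sqlite3` module as a stand-in database so that it runs anywhere; `hdbcli` also follows the DB-API, so the cursor calls should look similar, but this has not been tested against SAP HANA here. The table, columns, and helper names are hypothetical.

```python
import sqlite3


def fetch_in_batches(cursor, query, batch_size):
    """Yield query results in lists of at most batch_size rows."""
    cursor.execute(query)
    while True:
        rows = cursor.fetchmany(batch_size)
        if not rows:
            break
        yield rows


def process_batches(cursor, query, batch_size, handle_row):
    """Process rows batch by batch, recording failures instead of crashing."""
    processed, failures, offset = 0, [], 0
    for batch in fetch_in_batches(cursor, query, batch_size):
        for i, row in enumerate(batch):
            try:
                handle_row(row)
                processed += 1
            except Exception as exc:
                # Keep the row position so the bad record can be found later.
                failures.append((offset + i, row, repr(exc)))
        offset += len(batch)
    return processed, failures


# Stand-in data: sqlite3 replaces SAP HANA purely for illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE transactions (account_id INTEGER, amount TEXT)")
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?)",
    [(1, "100.0"), (1, "-150.0"), (2, "n/a"), (2, "50.0"), (3, "20.0")],
)

totals = {}


def add_to_total(row):
    account_id, amount = row
    totals[account_id] = totals.get(account_id, 0.0) + float(amount)


processed, failures = process_batches(
    conn.cursor(),
    "SELECT account_id, amount FROM transactions ORDER BY rowid",
    batch_size=2,
    handle_row=add_to_total,
)
print(processed, failures)
```

Even with this kind of bookkeeping, every safeguard is extra code to write and maintain, which is part of the maintenance burden described above.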

These issues called for a solution superior to the vanilla Python approach. We needed to shift our implementation methodology to make greater use of SAP HANA and SAP Data Services. We were surprised by how easy this was and how strong these tools are in combination, to the point that our pipeline is now almost fully implemented on them. The pipeline is currently about 90% complete; it uses the three platforms together efficiently and has saved us a significant amount of time that would otherwise have been spent managing scripts.

Furthermore, the pipeline is organized into clear stages, so if we encounter an error or a problem at one stage, we can immediately target it and fix the problem without affecting the others.

That said, Python remains very powerful in specific cases, including:

Performing customized or difficult logic modifications on small parts of the data, such as the re-distribution technique we introduced in the previous article.

The actual machine learning model deployment and management.

Scraping or gathering data from the web, a matter that we will discuss in depth in another article.

The following diagram shows the implementation of the pipeline (“In Progress”) on SAP Data Services:

You can immediately see that this looks significantly better than managing scripts. Each step is visible, along with what it does. You can also drill down to see the fixed inputs and outputs of each stage. Therefore, if a stage requires modifications, they can be made to that stage alone. So long as its output isn't affected, the following stage would not usually require modifications. In terms of management, this is one of the most effective methods for implementing data manipulations for machine learning purposes.
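The idea of stages with fixed inputs and outputs can be sketched in plain Python as an analogy. This is not how SAP Data Services works internally; the `Stage` class, the stage names, and the column sets are all hypothetical and serve only to show why a stable output contract lets one stage change without touching the next.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Rows = List[Dict[str, object]]


@dataclass
class Stage:
    """A pipeline step with a declared (fixed) set of output columns."""
    name: str
    run: Callable[[Rows], Rows]
    output_columns: frozenset


def run_pipeline(stages: List[Stage], rows: Rows) -> Rows:
    for stage in stages:
        rows = stage.run(rows)
        # Fail at the stage that broke its contract, not somewhere downstream.
        for row in rows:
            if frozenset(row) != stage.output_columns:
                raise ValueError(
                    f"stage {stage.name!r} broke its output contract: {sorted(row)}"
                )
    return rows


def clean(rows: Rows) -> Rows:
    # Drop records with a missing amount.
    return [r for r in rows if r["amount"] is not None]


def flag_debt(rows: Rows) -> Rows:
    # Assumption for illustration: a negative amount means the user is indebted.
    return [{**r, "indebted": r["amount"] < 0} for r in rows]


stages = [
    Stage("clean", clean, frozenset({"account_id", "amount"})),
    Stage("flag_debt", flag_debt, frozenset({"account_id", "amount", "indebted"})),
]
output = run_pipeline(
    stages,
    [
        {"account_id": 1, "amount": -50.0},
        {"account_id": 2, "amount": None},
        {"account_id": 3, "amount": 20.0},
    ],
)
print(output)
```

As long as `clean` keeps returning rows with the same columns, its internals can be rewritten freely without any change to `flag_debt`.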

In summary, the change in our approach afforded us numerous benefits, which included:

Saving large amounts of development and maintenance time by greatly reducing the execution time of the pipeline.

Simplifying the look and feel of the pipeline by using SAP Data Services.

Retaining the same strengths afforded to us using Python, as all the coding performed on SAP HANA is done using Structured Query Language (SQL).
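To illustrate the last point, a monthly aggregation can be expressed as a single SQL statement that the database executes itself. The sketch below runs such a query through Python's built-in `sqlite3` module as a stand-in; the table and column names are hypothetical, and the `strftime` date function is SQLite syntax, so the equivalent SAP HANA statement would use HANA's own date functions.

```python
import sqlite3

# Net amount per account per month, computed entirely inside the database.
# SQLite dialect; an SAP HANA version would need HANA date functions instead.
MONTHLY_SQL = """
SELECT account_id,
       strftime('%Y-%m', txn_date) AS month,
       SUM(amount) AS net_amount
FROM transactions
GROUP BY account_id, strftime('%Y-%m', txn_date)
ORDER BY account_id, month
"""

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE transactions (account_id INTEGER, txn_date TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO transactions VALUES (?, ?, ?)",
    [
        (1, "2020-01-05", 100.0),
        (1, "2020-01-20", -150.0),
        (1, "2020-02-03", 80.0),
        (2, "2020-01-10", 50.0),
        (2, "2020-02-15", -20.0),
    ],
)

monthly_rows = conn.execute(MONTHLY_SQL).fetchall()
for row in monthly_rows:
    print(row)
```

Because the grouping happens where the data lives, only the aggregated rows ever travel over the connection.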

Does SAP HANA deserve the crown as the king of data science implementations? Let us know your opinions in the comments below. We will not discuss the details of each stage of the implementation here, as this is beyond the scope of one article. We plan to release a longer series detailing each stage of our approach, so stay tuned!